Image Credit: OpenAI.com

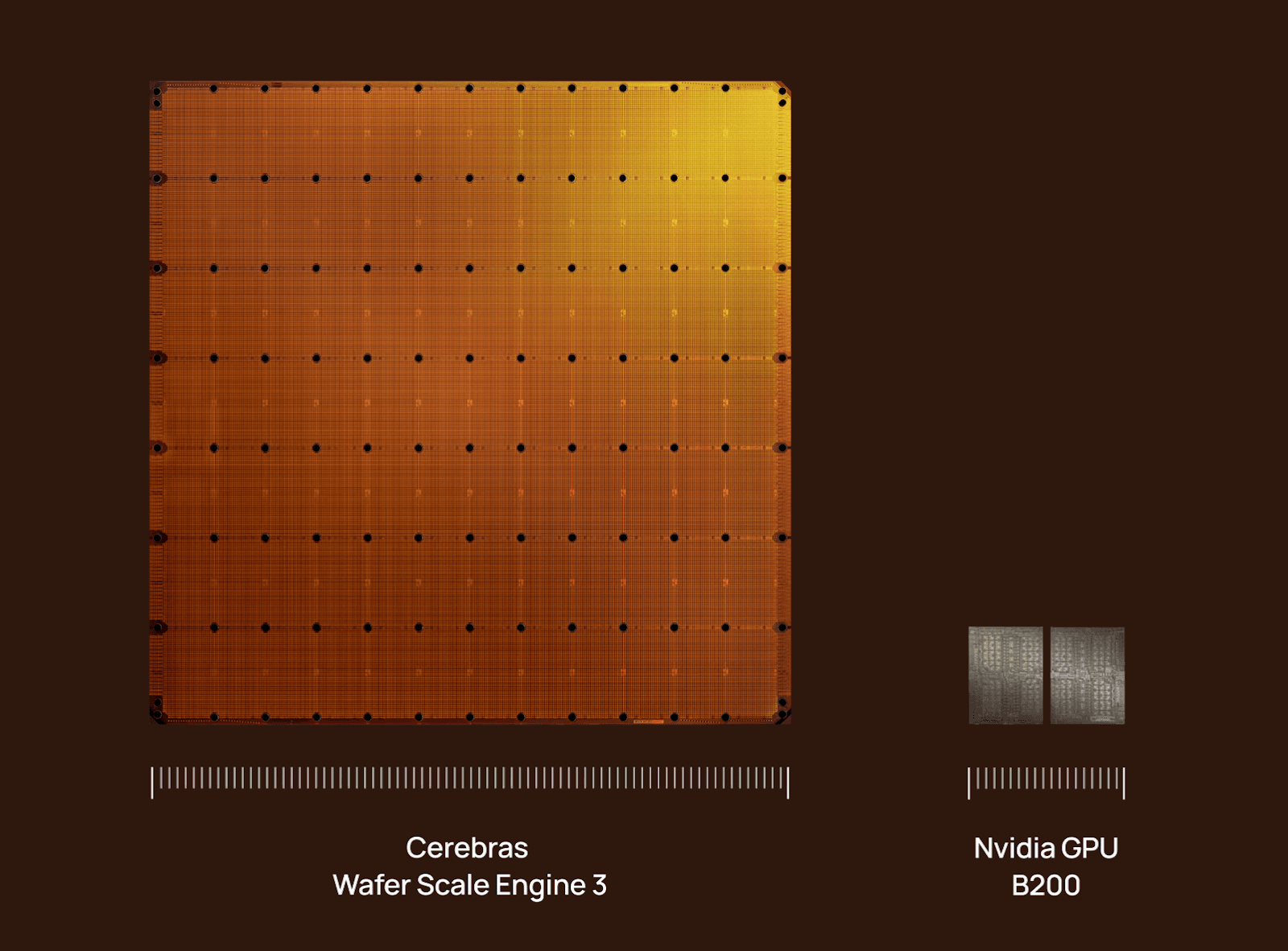

For much of its history, Cerebras has been one of the most ambitious and polarizing names in AI hardware. Its wager was radical: instead of stitching together ever-larger GPU clusters, build one giant wafer-scale processor and let models run without the communication bottlenecks that plague distributed systems.

We previously looked at how that architectural bet stacks up against Nvidia’s GPU ecosystem in “Cerebras vs. Nvidia: Who Leads the AI Compute War?” What’s changed since then is not the theory, but the business reality.

Between late 2025 and early 2026, Cerebras landed a marquee customer, accelerated revenue growth, and entered reported talks to raise capital at a $22 billion valuation, all while preparing for a potential 2026 IPO. Wafer-scale is no longer just a technical curiosity; it is becoming a capital-markets story.

1. The $10 Billion Validation: The OpenAI Deal

In January 2026, reporting revealed that OpenAI had agreed to purchase up to 750 megawatts of compute capacity from Cerebras in a multi-year deal valued at more than $10 billion, with deployments extending through 2028.

This wasn’t a pilot or a limited experiment. It was an infrastructure-level commitment.
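As a rough sense of scale, the two headline figures can be combined into an implied contract value per megawatt. The sketch below is only back-of-the-envelope arithmetic on the reported numbers: because the deal is described as "more than" $10 billion for "up to" 750 megawatts, the result is a lower bound, not a reported price. The function name `usd_per_megawatt` is illustrative.

```python
# Reported figures: "more than $10 billion" and "up to 750 megawatts".
DEAL_VALUE_USD = 10e9   # lower bound on the contract value
CAPACITY_MW = 750       # upper bound on the contracted capacity


def usd_per_megawatt(deal_value_usd: float, capacity_mw: float) -> float:
    """Implied contract value per megawatt of compute capacity."""
    return deal_value_usd / capacity_mw


floor = usd_per_megawatt(DEAL_VALUE_USD, CAPACITY_MW)
# Dividing a lower bound on value by an upper bound on capacity
# gives a lower bound on the value per megawatt.
print(f"Implied value: at least ${floor / 1e6:.1f} million per MW")
```

On these assumptions the contract works out to at least roughly $13 million per megawatt over its lifetime.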

The significance goes beyond the headline number:

Validation at the highest level: OpenAI is among the world’s most demanding buyers of AI compute.

Revenue visibility: multi-year capacity contracts reduce volatility and strengthen IPO-era narratives.

Customer diversification: Cerebras had previously faced criticism for relying too heavily on a single major customer. The OpenAI deal materially changes that picture.

Image Credit: ft.com

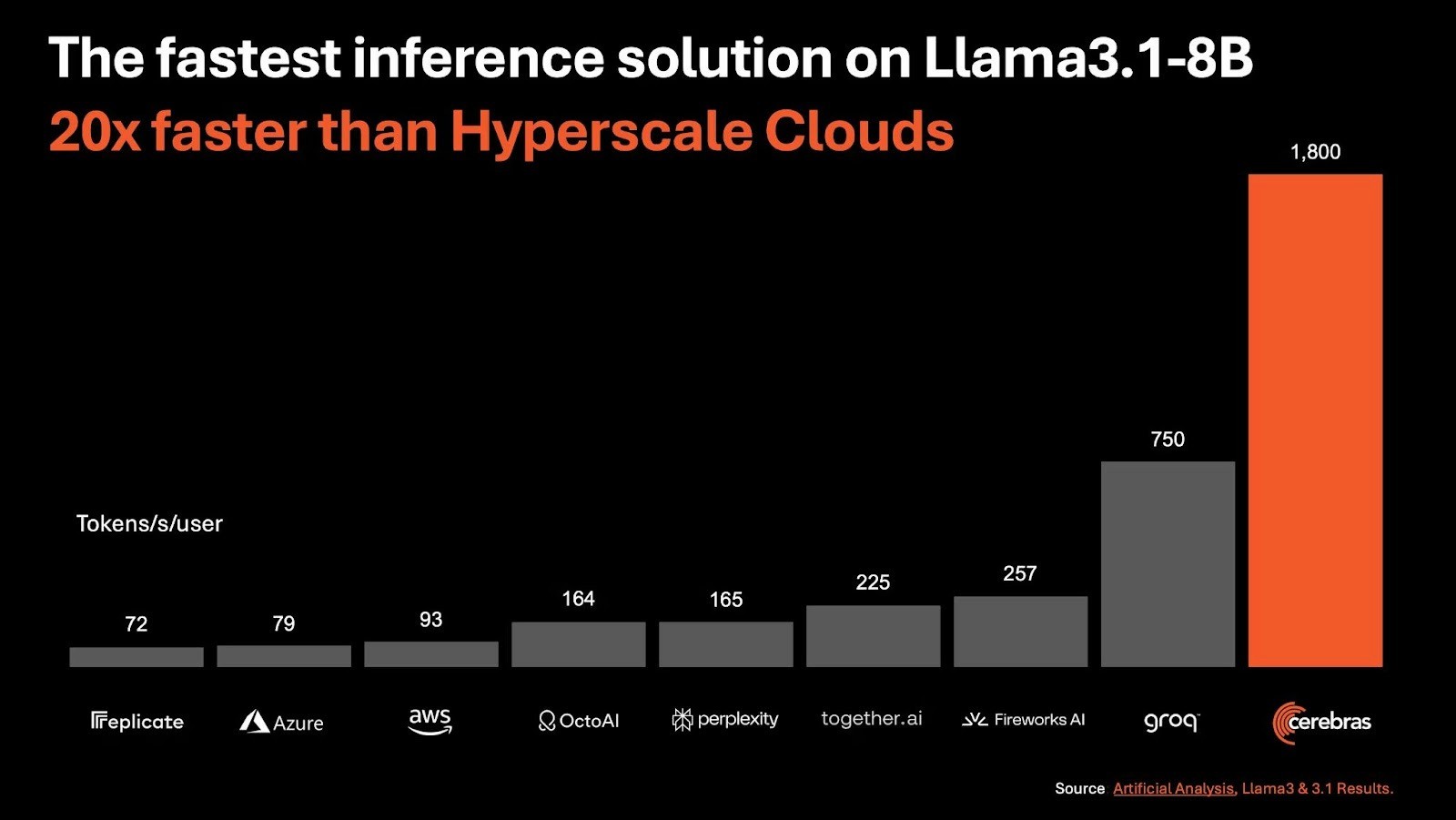

2. Performance Claims: Leading on Tokens per Second

In recent independent benchmarking reported by Artificial Analysis, the Cerebras CS-3 achieved approximately 2,500–2,700 tokens per second running inference on Meta’s 400B-parameter Llama 4 Maverick model, compared with roughly 1,000 tokens per second on an Nvidia DGX B200 Blackwell GPU cluster in the same test.

These figures are workload-specific, but directionally important. As context lengths grow and inference volumes explode, performance is increasingly limited not by raw compute, but by memory locality and interconnect overhead. This is precisely where Cerebras’ wafer-scale architecture shines, and why OpenAI’s interest is focused on inference, not just training.
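To make the throughput gap concrete, the quoted figures can be turned into a speedup ratio and a time-to-generate estimate. This is a minimal sketch using only the numbers cited above; the 1,000-token response length and the function names are assumptions for illustration, and real-world latency also depends on prompt processing, batching, and network overhead that this ignores.

```python
# Throughput figures quoted above, in output tokens per second.
CEREBRAS_TPS_RANGE = (2500, 2700)  # CS-3, low and high end of the range
NVIDIA_TPS = 1000                  # DGX B200 cluster, same test

# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 1000


def speedup(tps: float, baseline_tps: float) -> float:
    """Throughput ratio relative to a baseline system."""
    return tps / baseline_tps


def seconds_to_generate(num_tokens: int, tps: float) -> float:
    """Time to stream num_tokens at a steady tokens-per-second rate."""
    return num_tokens / tps


for tps in CEREBRAS_TPS_RANGE:
    print(
        f"{tps} tok/s: {speedup(tps, NVIDIA_TPS):.1f}x the baseline, "
        f"{seconds_to_generate(RESPONSE_TOKENS, tps):.2f} s per response"
    )

print(
    f"{NVIDIA_TPS} tok/s baseline: "
    f"{seconds_to_generate(RESPONSE_TOKENS, NVIDIA_TPS):.2f} s per response"
)
```

On these numbers the CS-3 result is roughly 2.5x to 2.7x the DGX B200 result, which for a 1,000-token response is the difference between about 0.4 seconds and about 1 second of generation time.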

Image Credit: Cerebras

3. From Customer Concentration to Customer Momentum

Earlier public disclosures made one risk uncomfortably clear: customer concentration. At one point, a single customer accounted for the vast majority of Cerebras’ revenue, a red flag for public investors.

The past two years mark a clear pivot:

Broader adoption across enterprise, research, and platform customers

Expansion beyond sovereign and anchor buyers

A marquee OpenAI commitment that reshapes the revenue mix

Revenue climbed from sub-$100 million levels in 2023 to hundreds of millions by 2024, and private-market modeling increasingly points to a credible path toward $500 million to $1 billion+ in annual revenue as large inference contracts ramp up.

That scale is what turns technical differentiation into an IPO-grade story.

4. Cerebras vs. Nvidia, Revisited

Nvidia still dominates AI compute through software ecosystems, developer mindshare, and full-stack platforms. Cerebras isn’t trying to replace that dominance overnight. Instead, its strategy is narrower and more surgical:

High-throughput inference at scale

Simpler scaling for giant models

Reduced interconnect complexity

Image Credit: Cerebras

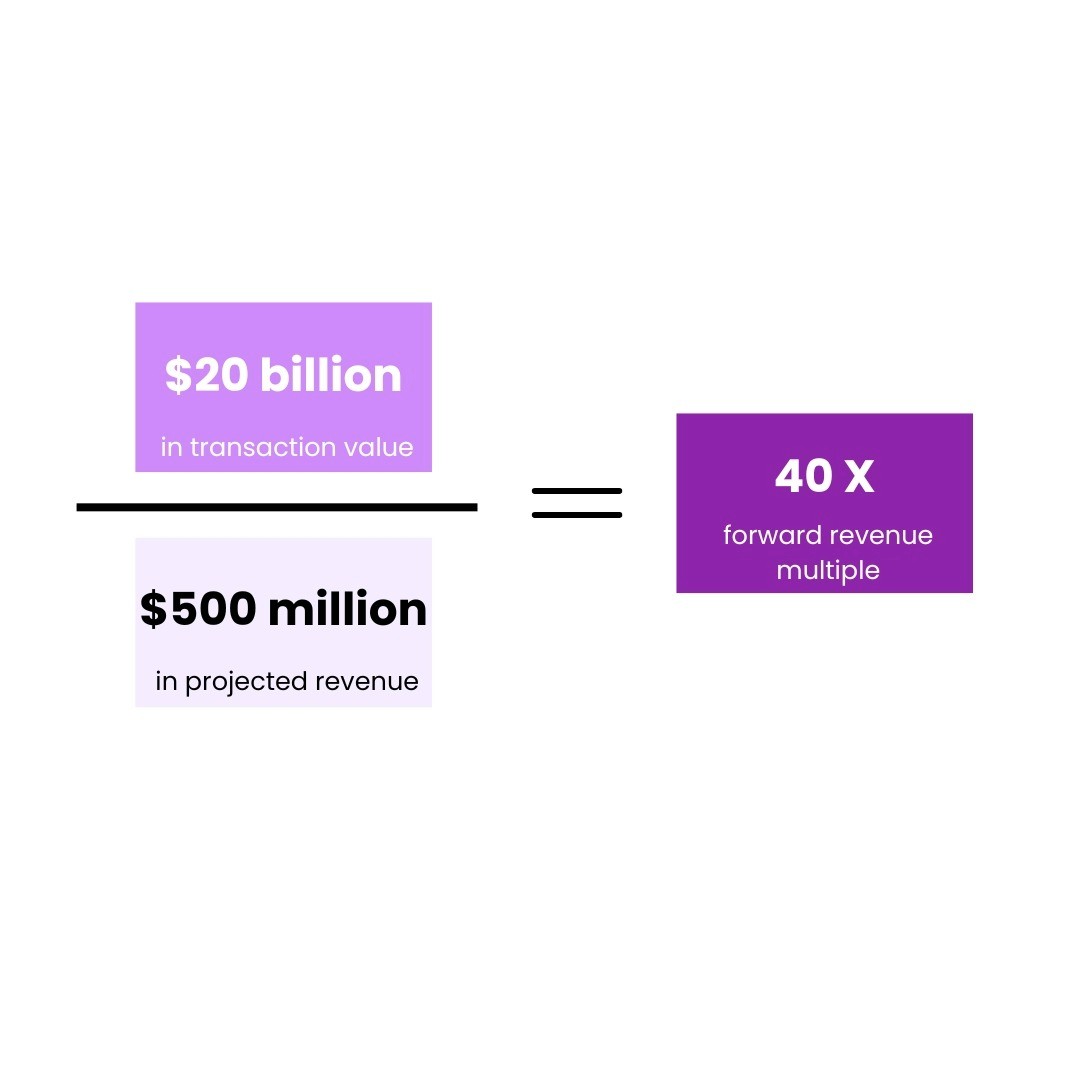

Ironically, Nvidia reinforced this framing itself. By agreeing to a roughly $20 billion deal involving Groq, Nvidia signaled that inference-specific differentiation is strategically valuable enough to command enormous premiums. That transaction changed how the entire market thinks about inference accelerators.

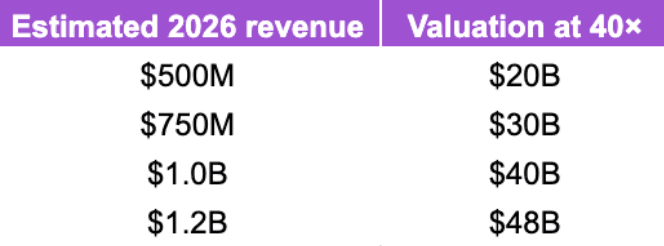

5. The Groq Multiple and What It Implies for Cerebras

Public reporting around Nvidia’s Groq deal implies:

~$500 million in projected revenue

~$20 billion in transaction value

≈ 40× forward revenue multiple

That multiple would be extraordinary for traditional semiconductors, but inference infrastructure is being valued more like strategic software platforms. If Cerebras were valued on similar terms, the IPO math would look like this:

Copyright © Jarsy Research

For context: Cerebras last raised at a valuation of ~$8.1 billion in late 2025. It is reportedly in talks to raise capital at ~$22 billion. An IPO is widely rumored for 2026.
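The multiple arithmetic is simple enough to reproduce. The sketch below derives the Groq multiple from the two reported figures and applies it to the $500 million to $1 billion revenue range mentioned earlier in this article. It is an illustrative calculation, not a reconstruction of the Jarsy Research figures and not a forecast; the scenario labels and function names are assumptions.

```python
# Figures reported for the Groq deal.
GROQ_DEAL_VALUE = 20e9         # ~$20 billion transaction value
GROQ_PROJECTED_REVENUE = 500e6 # ~$500 million projected revenue

# Cerebras figures cited in this article.
REPORTED_TALKS_VALUATION = 22e9  # reported fundraising talks
REVENUE_SCENARIOS = {            # hypothetical labels for the cited range
    "low end ($500M)": 500e6,
    "high end ($1B)": 1e9,
}


def forward_multiple(value: float, forward_revenue: float) -> float:
    """Valuation divided by projected revenue."""
    return value / forward_revenue


def implied_valuation(forward_revenue: float, multiple: float) -> float:
    """Valuation implied by applying a revenue multiple."""
    return forward_revenue * multiple


groq_multiple = forward_multiple(GROQ_DEAL_VALUE, GROQ_PROJECTED_REVENUE)
print(f"Groq forward multiple: {groq_multiple:.0f}x")

for label, revenue in REVENUE_SCENARIOS.items():
    at_groq = implied_valuation(revenue, groq_multiple)
    at_talks = forward_multiple(REPORTED_TALKS_VALUATION, revenue)
    print(
        f"{label}: ${at_groq / 1e9:.0f}B at the Groq multiple; "
        f"$22B would be {at_talks:.0f}x revenue"
    )
```

At a 40× multiple, the cited revenue range implies roughly $20 billion to $40 billion, so the reported ~$22 billion figure sits near the low end of that band. The result is highly sensitive to which revenue figure and which year the multiple is applied to.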

6. What Comes Next for Cerebras

As Cerebras enters its next phase, the debate has shifted from whether wafer-scale computing works to whether it can be deployed reliably and repeatedly at scale. The OpenAI agreement offers a real-world test of performance, operations, and integration across large inference workloads.

What matters next is execution: how smoothly systems are delivered, how they perform in production, and how broadly the approach resonates beyond a single flagship customer. For the first time, Cerebras is being evaluated not just as a bold hardware innovator, but as a core piece of AI infrastructure.

Further reading: Introducing Cerebras Inference; Cerebras CS-3 vs. Nvidia DGX B200 Blackwell; Cerebras & OpenAI Deal; Cerebras Potential IPO